When systems slow down under load, a common reaction is to increase the database connection pool size. The logic seems straightforward: more connections should allow more concurrent queries. In practice, this change often makes latency worse, throughput less stable, and failures more frequent. This article examines the exact mechanisms behind this counterintuitive outcome, explaining how connection pools interact with databases, operating systems, and application threads—and why “more” frequently becomes “too much.”

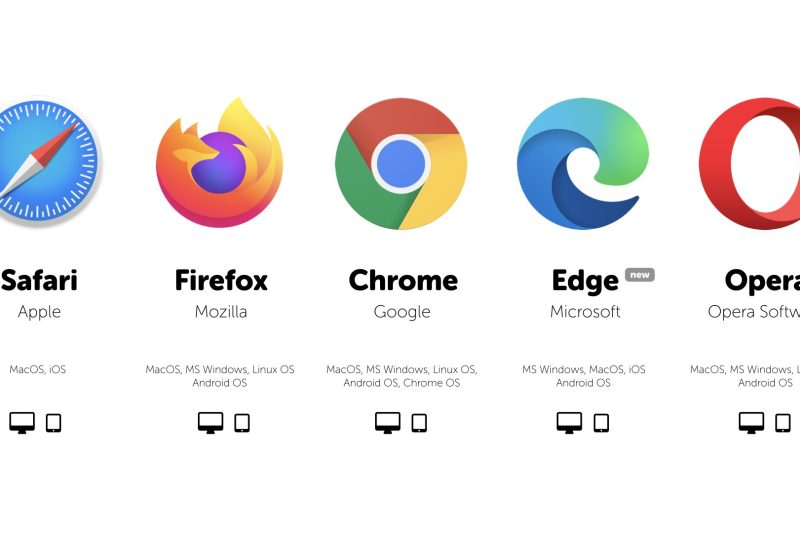

Why HTTP/2 Can Be Slower Than HTTP/1.1 on Unstable Networks

HTTP/2 is widely promoted as a faster replacement for HTTP/1.1, promising multiplexing, header compression, and better performance. Yet in real-world environments—especially mobile networks or unstable connections—HTTP/2 can be noticeably slower. This article focuses on one specific reason: how HTTP/2’s reliance on a single TCP connection interacts poorly with packet loss, and why this negates many of its theoretical advantages.

Why Adding More Database Indexes Can Make Queries Slower Instead of Faster

Indexes are commonly seen as a universal solution to slow database queries. When performance degrades, the instinctive reaction is often to add more indexes. Yet in many real systems, this approach leads to worse performance, higher latency, and unstable behavior. This article dives into the mechanics of how database indexes actually work, why excessive indexing backfires, and how index overload quietly degrades query execution rather than improving it.

Why Your Website Still Feels Slow Even With Caching: A Deep Dive Into Browser and CDN Cache Behavior

Many websites enable caching but still suffer from slow load times, inconsistent performance, or unexpected cache misses. The problem is rarely “cache not enabled,” but rather how different caching layers—browser, CDN, and origin server—interact in practice. This article dives into the mechanics of HTTP caching, explaining why common configurations fail, how cache decisions are actually made, and where performance silently breaks down.